Next month, Meta assumes the chair of the Operating Board of the Global Internet Forum to Counter Terrorism (GIFCT). GIFCT is an NGO that brings together technology companies to tackle terrorist content online through research, technical collaboration and knowledge sharing. Meta is a founding member of GIFCT, which was established in 2017 and evolved into a nonprofit organization following the 2019 Christchurch Call, which brings together member companies, governments and civil society organizations to tackle terrorist and violent extremist content online.

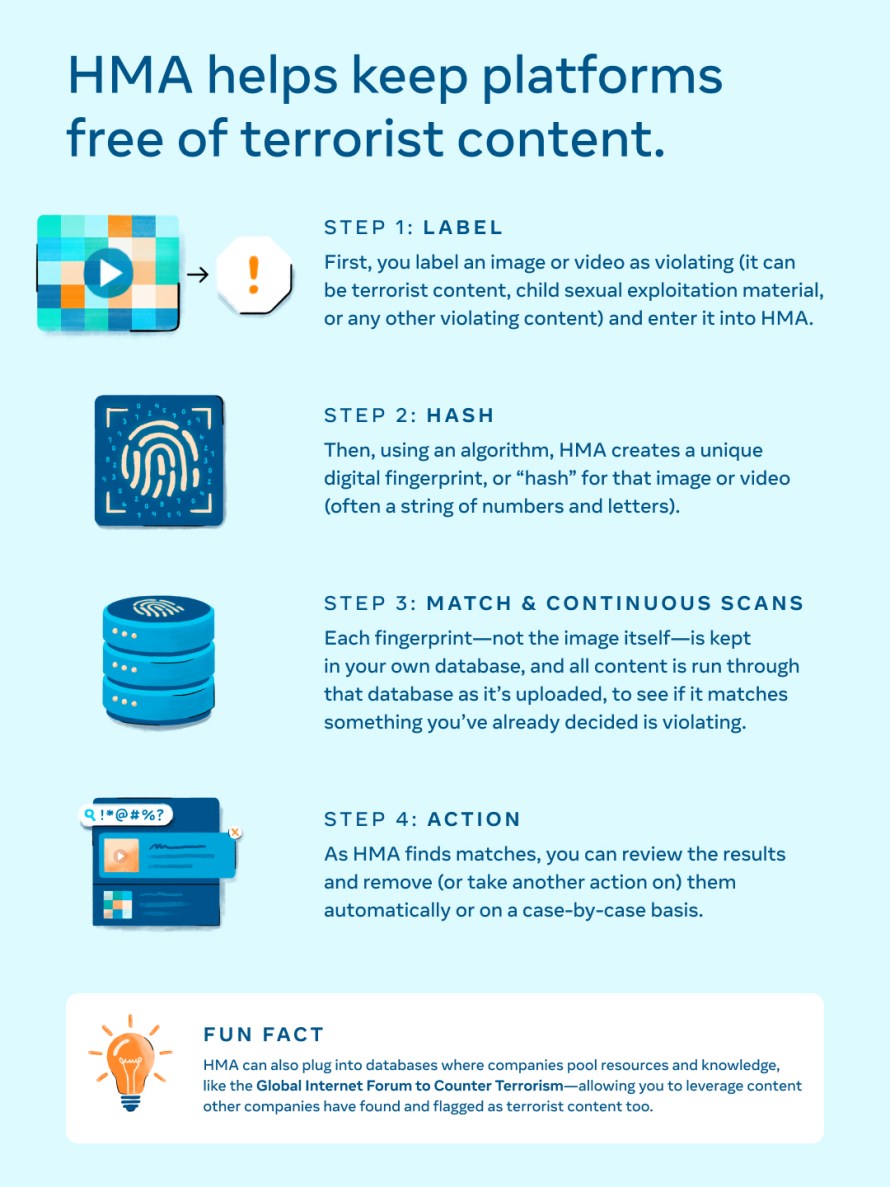

Meta is also making available a free, open source software tool it has developed that will help platforms identify copies of images or videos and take action against them en masse. We hope the tool, called Hasher-Matcher-Actioner (HMA), will be adopted by a range of companies to help them stop the spread of terrorist content on their platforms, and will be especially useful for smaller companies that don’t have the same resources as bigger ones. HMA builds on Meta’s previous open source image and video matching software, and can be used for any type of violating content.

GIFCT member companies often use what’s called a hash sharing database to help keep their platforms free of terrorist content. Instead of storing harmful or exploitative content like videos from violent attacks or terrorist propaganda, GIFCT stores a hash, or unique digital fingerprint, for each image and video. The more companies that participate in the hash sharing database, the better and more comprehensive it is, and the better we all are at keeping terrorist content off the internet, especially since people will often move from one platform to another to share this content. But many companies do not have the in-house technology capabilities to find and moderate violating content in high volumes, which is why HMA is a potentially valuable tool.
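To make the idea concrete, here is a minimal sketch in Python of how hashing, matching and actioning against a shared hash database might fit together. It is an illustration only, not HMA's actual code or API: the names `fingerprint`, `SharedHashDatabase` and `moderate` are hypothetical, and SHA-256 is used as a stand-in that only matches exact byte-for-byte copies, whereas image and video matching tools generally rely on perceptual hashes that tolerate changes such as re-encoding or resizing.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict, Set


def fingerprint(content: bytes) -> str:
    """Hash step. ASSUMPTION: SHA-256 stands in for a perceptual hash,
    so only exact byte-for-byte copies will match."""
    return hashlib.sha256(content).hexdigest()


@dataclass
class SharedHashDatabase:
    """Hypothetical shared database: it keeps fingerprints, never the media."""
    hashes: Set[str] = field(default_factory=set)

    def contribute(self, content: bytes) -> str:
        """A participating platform shares the hash of content it has identified."""
        digest = fingerprint(content)
        self.hashes.add(digest)
        return digest

    def matches(self, content: bytes) -> bool:
        """Match step: is this upload's fingerprint already known?"""
        return fingerprint(content) in self.hashes


def moderate(uploads: Dict[str, bytes], db: SharedHashDatabase) -> Dict[str, str]:
    """Action step: label every upload in bulk. A real platform would
    route matches to review or removal rather than just labelling them."""
    return {
        name: ("flag_for_review" if db.matches(data) else "allow")
        for name, data in uploads.items()
    }


db = SharedHashDatabase()
db.contribute(b"bytes of a known violating video")  # shared by one platform

# Another platform checks its own uploads against the shared hashes.
decisions = moderate(
    {
        "copy.mp4": b"bytes of a known violating video",
        "holiday.mp4": b"bytes of an unrelated video",
    },
    db,
)
print(decisions)  # {'copy.mp4': 'flag_for_review', 'holiday.mp4': 'allow'}
```

Because only fingerprints are exchanged, a platform can check its uploads against content identified elsewhere without any participant having to store or redistribute the original material.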

Meta spent approximately $5 billion globally on safety and security last year, and has more than 40,000 people working on it. Within that, we have a team of hundreds of people dedicated to counter-terror work specifically, with expertise ranging from law enforcement and national security, to counterterrorism intelligence and academic studies in radicalization.

Meta’s commitment to tackling terrorist content is part of a wider approach to protecting users from harmful content on our services. We’re a pioneer in developing AI technology to remove hateful content at scale. Hate speech is now viewed two times for every 10,000 views of content on Facebook, down from 10-11 times per 10,000 views less than three years ago. We also block millions of fake accounts every day so they can’t spread misinformation, and have taken down more than 150 networks of malicious accounts worldwide since 2017.

We’ve learned over many years that if you run a social media operation at scale, you have to create rules and processes that are as transparent and evenly applied as possible. That’s why we have detailed Community Standards setting out what isn’t acceptable on our services, which are published openly and reviewed consistently. We publish reports every quarter detailing the progress we’re making on things like detecting hate speech and other harmful content, the malicious networks we disrupt, and the content that is most viewed on our services.

Of course, we’re not perfect, and fair-minded people will disagree as to whether the rules and processes we have are the right ones. But we take these issues seriously, try to act responsibly and transparently, and invest huge amounts in keeping our platform safe. Many of these issues go way beyond any one company or institution. No one can solve them on their own, which is why cross-industry and cross-government collaborations like GIFCT and the Christchurch Call are so important.


source https://about.fb.com/news/2022/12/meta-launches-new-content-moderation-tool/
